Data Storage Strategy: What is High-Performance Object Storage?

If you’ve only considered object storage for secondary applications, such as archiving and backup, it might be time to re-examine the data management landscape, as you may be overpaying for on-premises performance-driven storage. While early object storage adoption was historically associated with backing up large volumes of secondary unstructured data to cloud service providers, recent innovations in flash media, along with advances in storage hardware and software, have boosted the performance and data integrity of software-defined storage platforms.

Previously, we covered the benefits of capacity-based object storage and how it compares favorably with the high OPEX of a static-architecture data center. Next, we’ll discuss how object storage performance has been boosted to better meet the throughput needs of organizations that want to process data closer to where it is stored.

Evaluating high-performance object storage solutions? Here’s what data center administrators need to know to compare composable infrastructure vs. virtualization or container-based infrastructure. Questions? Drop us an email at

High-Performance Object Storage Solutions

Higher-capacity NVMe flash-based SSDs have replaced low-cost spinning disk, while erasure coding offloaded to the storage media has improved system performance and data integrity. Additionally, NVMe over Fabrics (NVMe-oF) has eliminated the throughput challenges of storing warm data on direct-attached storage. Together, these technologies allow more data to stay in the object store rather than being copied to local file or block storage.
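The erasure-coding idea can be illustrated with its simplest form, a single XOR parity chunk (the same principle as RAID 5). The Python sketch below is illustrative only: the function names are hypothetical, it computes parity in software rather than on the storage media, it assumes equal-sized chunks, and it tolerates the loss of just one chunk. Production object stores such as Ceph typically use Reed-Solomon-style codes with k data and m coding chunks, which this sketch does not implement.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(chunks: list[bytes]) -> bytes:
    """Compute one parity chunk as the XOR of all data chunks (k data + 1 parity)."""
    if len({len(c) for c in chunks}) != 1:
        raise ValueError("all chunks must be the same length")
    return reduce(xor_bytes, chunks)


def recover(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing data chunk by XORing parity with the survivors."""
    return reduce(xor_bytes, surviving, parity)


if __name__ == "__main__":
    data = [b"obj-", b"stor", b"age!"]
    parity = encode(data)
    # Simulate losing chunk 1, then rebuild it from the other chunks plus parity.
    rebuilt = recover([data[0], data[2]], parity)
    print(rebuilt)  # b'stor'
```

Losing any one data chunk is survivable because XOR is its own inverse; tolerating more simultaneous failures requires additional, independently computed coding chunks.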

On-premises object storage now performs well enough to meet the needs of organizations that want to process data close to where it is stored. As a result, object storage adoption has broadened to use cases with ‘warm’ data access characteristics – including content delivery, artificial intelligence (AI), the Internet of Things (IoT), and big data and analytics – as well as high-throughput backup and restore.

OSNEXUS QuantaStor

One performance-built object storage provider that Pogo Linux has partnered with is OSNEXUS. The Bellevue, Wash. company’s QuantaStor software-defined storage platform was developed to deliver a turnkey enterprise compute cluster on-premises, at the edge, or in the cloud, with the ability to scale up and scale out. Taking a hardware-agnostic approach, QuantaStor installs on bare metal on standardized Pogo Linux servers, turning them into petabyte-scale, high-performance object storage systems.

By making data management more efficient and IT more agile, OSNEXUS QuantaStor dramatically simplifies storage management and automation. Data center organizations can realize cost savings of upwards of 50% by avoiding the time and expense of additional software for setup and maintenance.

🌐 Why Storage Grids Matter

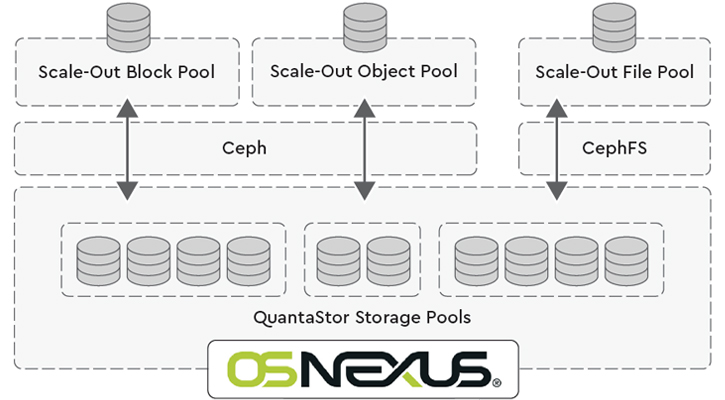

QuantaStor’s biggest differentiator is the advanced OSNEXUS storage grid technology, which can combine and manage multiple storage appliances across sites – including public and private clouds – as a single unified storage platform, at scale. With federated data management, the built-in storage grid supports all major block, file, and object storage protocols, including iSCSI/FC, NFS/SMB, and Amazon S3.

📋 Ceph & ZFS Under the Hood

QuantaStor integrates ZFS and Ceph, so users can deploy and manage multiple compute nodes and expand storage clusters with little or no specialized knowledge of either technology. The OSNEXUS storage grid manages the underlying Ceph technology end-to-end – automating self-healing and building best practices into the platform – to minimize administration and ensure deployments are set up correctly.

With this integrated design, Ceph replicates data to make it fault-tolerant, while QuantaStor’s hardware management actively monitors key components on Pogo Linux systems, including power supplies, fans, temperature, and HDD health. QuantaStor’s integration with Amazon S3 also provides public cloud options for multi-site management and scheduled replication.
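Ceph can protect data either by keeping full replicas or with erasure-coded pools, and that choice largely determines usable capacity. The back-of-the-envelope Python sketch below compares the two. The 3-way replication and 4+2 erasure-coding profiles and the 1,200 TB raw figure are illustrative assumptions, not QuantaStor defaults, and the math ignores Ceph’s full-ratio headroom and metadata overhead.

```python
def usable_replicated(raw_tb: float, replicas: int) -> float:
    """Usable capacity when every object is stored `replicas` times."""
    return raw_tb / replicas


def usable_erasure_coded(raw_tb: float, k: int, m: int) -> float:
    """Usable capacity for an erasure-coded pool with k data and m coding chunks."""
    return raw_tb * k / (k + m)


raw = 1200.0  # hypothetical raw cluster capacity in TB

# Both layouts tolerate the loss of two copies/chunks (given suitable failure domains).
print(f"3x replication: {usable_replicated(raw, 3):.0f} TB usable")
print(f"EC 4+2:         {usable_erasure_coded(raw, 4, 2):.0f} TB usable")
```

In this example erasure coding doubles usable capacity for the same failure tolerance, at the cost of extra CPU and network work during writes and rebuilds.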

⚡ NVMe Storage Pools with NVMe-oF

Data center operators running workloads that demand high performance and low latency can gain substantial advantages by moving end-to-end to NVMe flash-based storage arrays connected to hosts via NVMe over Fabrics (NVMe-oF) instead of traditional network storage connections. QuantaStor supports highly available NVMe storage pools for all storage types, including SAN, NAS, and object storage, with support for NVMe-oF. With Western Digital NVMe integration in the head nodes and JBOFs (just a bunch of flash) for direct-attached storage expansion, QuantaStor can deliver high-performance compute and dramatically faster object storage at scale for on-premises applications.

💪 Storage Integrations

OSNEXUS has partnered with leading backup, DevOps, and monitoring companies to test and validate QuantaStor for mission-critical environments. Integrations with Veeam for backup, Docker for containers, PagerDuty for monitoring, and other enterprise-ready APIs enable QuantaStor appliances built on Pogo Linux servers to fit into a variety of workflows and solve complex challenges.

The ability to accelerate time-to-market for digital products, or to improve service levels and project delivery times, is a necessary competitive advantage for any data-driven organization. A comprehensive software-defined composable infrastructure framework can give data center admins the ability to configure, manage, and scale out physical bare-metal server systems in seconds.

Setting up an Object Storage Proof of Concept

Hosted applications will drive future adoption of composable infrastructure, and proofs of concept (POCs) are the best way to evaluate a composability investment against a converged or hyperconverged environment. More often than not, the right solution for your data center mixes composable and static architectures to achieve the greatest platform flexibility, scalability, and unit economics.

IT decision makers should lay out rigorous performance, resiliency, and scalability requirements. Knowledge of the hosted application’s requirements is also necessary to ensure a POC provides clear, decisive results. To objectively measure technology and operational benefits, you can start small and build out as you go. Most composable infrastructure vendors will happily help you set up a proof of concept to test the design that best meets your needs.
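One way to keep a POC decisive is to write the requirements down as explicit pass/fail thresholds before testing begins. The Python sketch below is a hypothetical illustration: the metric names, threshold values, and measured numbers are placeholders, not benchmark data, and should be replaced with your own application’s requirements and POC results.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    metric: str             # hypothetical metric name, e.g. "p99_read_latency_ms"
    threshold: float        # target agreed on before the POC starts
    higher_is_better: bool  # True for throughput-style metrics, False for latency/time

    def passes(self, measured: float) -> bool:
        """Return True if the measured value meets this requirement."""
        if self.higher_is_better:
            return measured >= self.threshold
        return measured <= self.threshold


def evaluate(reqs: list[Requirement], results: dict[str, float]) -> dict[str, bool]:
    """Map each requirement to pass/fail; a missing measurement counts as a failure."""
    return {
        r.metric: (r.metric in results and r.passes(results[r.metric]))
        for r in reqs
    }


# Placeholder requirements spanning performance, resiliency, and scalability.
requirements = [
    Requirement("read_throughput_gbps", 10.0, higher_is_better=True),
    Requirement("p99_read_latency_ms", 5.0, higher_is_better=False),
    Requirement("rebuild_time_hours", 8.0, higher_is_better=False),
    Requirement("nodes_added_without_downtime", 2.0, higher_is_better=True),
]

# Placeholder POC measurements (invented for illustration, not real results).
measured = {
    "read_throughput_gbps": 12.5,
    "p99_read_latency_ms": 6.1,
    "rebuild_time_hours": 4.0,
}

for metric, ok in evaluate(requirements, measured).items():
    print(f"{metric}: {'PASS' if ok else 'FAIL'}")
```

Treating an untested requirement as a failure keeps gaps in the POC plan visible instead of letting them pass silently.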

Choosing a Performance-built Object Storage Solution

Low latency and high transfer rates are of little benefit if they outpace what the target application can consume. While these systems can generate IOPS approaching the millions, very few workloads actually require that much performance. However, an emerging class of workloads can take advantage of all the performance and low latency of an end-to-end NVMe system.
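The arithmetic behind this point is simple: throughput equals IOPS times I/O size, and by Little’s Law the number of I/Os an application must keep in flight equals IOPS times latency. The Python sketch below uses assumed figures (1,000,000 IOPS, 4 KiB I/Os, 100 µs latency) purely for illustration; they are not measurements of any particular system.

```python
def throughput_gb_per_s(iops: float, io_size_bytes: int) -> float:
    """Bandwidth implied by an IOPS rate at a fixed I/O size (decimal GB/s)."""
    return iops * io_size_bytes / 1e9


def required_queue_depth(iops: float, latency_s: float) -> float:
    """Little's Law: average outstanding I/Os = completion rate x time per I/O."""
    return iops * latency_s


iops = 1_000_000      # assumed rate, "approaching the millions"
io_size = 4 * 1024    # assumed 4 KiB random I/O
latency = 100e-6      # assumed 100 microsecond per-I/O latency

print(f"{throughput_gb_per_s(iops, io_size):.2f} GB/s")            # 4.10 GB/s
print(f"{required_queue_depth(iops, latency):.0f} I/Os in flight")  # 100 I/Os in flight
```

Under these assumptions, an application that cannot keep about 100 requests outstanding at once will never see the full million IOPS, which is why raw device figures alone overstate the benefit for most workloads.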

If you’d like to learn more about how to maximize your data center infrastructure by up to 90% while minimizing its footprint, give us a call at (888) 828-7646, email us at or book a time on our calendar to speak with us. We’ve helped organizations of all sizes deploy composable solutions for just about every IT budget.